OpenAI has recently released a string of impressive AI-powered tools. Its latest release could give 3D artists a run for their money.

If you have enjoyed using DALL-E, you will likely enjoy Point-E, which takes things up a notch by generating 3D objects.

Brief background on DALL-E

If you haven’t heard of DALL-E, it’s another tool from OpenAI that takes a user’s text prompt and creates a 2D image from it. The current version, DALL-E 2, relies on two techniques: CLIP and diffusion. CLIP (Contrastive Language-Image Pre-training) is the main brain of the operation: it is a model trained on image-caption pairs that learns how text and images relate, so a prompt can be mapped to a representation of the image it describes. A diffusion model then starts from random noise and gradually refines it into a 2D image that is representative of the prompt.

In addition to making images from scratch that have never been seen before, it can also change images and even blend new images into old ones. It has already taken the world of art by storm. While it has attracted a lot of controversy, it is indeed a powerful tool that has opened a world of possibilities for artists and non-artists alike.

The new and improved Point-E

Point-E takes this to the next level by creating 3D objects from text prompts. It is a text-to-3D generator that builds on generative image models: it first produces an image, then turns that image into a 3D shape.

So, it’s a two-step process. First, it interprets the user’s text prompt and uses a 3-billion-parameter GLIDE model to create a 2D image of the described object. This image, produced in much the same way DALL-E produces its images, is called a “synthetic view.”

Second, it generates a 3D object that matches the 2D image, using a model that has been trained on millions of labeled 3D objects. A stack of diffusion models produces a 3D RGB point cloud: a set of discrete, colored points that gives a rough representation of the object the user asked for.
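
To make the idea of an RGB point cloud concrete, here is a minimal sketch in plain Python. It is not Point-E's code. The function names `toy_coarse_cloud` and `toy_upsample` are made up for illustration, the shape is just a sphere, and the "upsampler" merely jitters existing points instead of running a diffusion model. The sizes (1,024 coarse points, 4,096 after upsampling) follow the figures reported in the Point-E paper.

```python
import math
import random
from typing import List, Tuple

# One point in an RGB point cloud: position (x, y, z) plus color (r, g, b).
Point = Tuple[float, float, float, float, float, float]


def toy_coarse_cloud(num_points: int, seed: int = 0) -> List[Point]:
    """Sample colored points on a unit sphere (a stand-in for a generated shape)."""
    rng = random.Random(seed)
    cloud = []
    for _ in range(num_points):
        # Normalizing a Gaussian vector gives a uniformly random direction.
        x, y, z = (rng.gauss(0.0, 1.0) for _ in range(3))
        norm = math.sqrt(x * x + y * y + z * z) or 1.0
        x, y, z = x / norm, y / norm, z / norm
        # Derive the color from the position so every point carries RGB values in [0, 1].
        cloud.append((x, y, z, (x + 1) / 2, (y + 1) / 2, (z + 1) / 2))
    return cloud


def toy_upsample(coarse: List[Point], target_points: int,
                 jitter: float = 0.02, seed: int = 1) -> List[Point]:
    """Densify a cloud by adding jittered copies of existing points.

    This is NOT a learned upsampler; it only mimics the coarse-to-fine idea.
    """
    rng = random.Random(seed)
    dense = list(coarse)
    while len(dense) < target_points:
        x, y, z, r, g, b = rng.choice(coarse)
        dense.append((x + rng.gauss(0.0, jitter),
                      y + rng.gauss(0.0, jitter),
                      z + rng.gauss(0.0, jitter),
                      r, g, b))
    return dense


coarse = toy_coarse_cloud(1024)
dense = toy_upsample(coarse, 4096)
print(len(coarse), len(dense))  # prints: 1024 4096
```

Each point is just six numbers, which is part of why point clouds are cheap to generate compared with heavier 3D representations.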

The point cloud is not the final product, as there’s still some work to be done on the model. A regression-based approach is used to convert the point cloud into a mesh, which gives a more refined result.
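
According to the Point-E paper, the regression model predicts a signed distance field (SDF) for the object, and a mesh is then extracted from that field. The sketch below shows only the SDF idea, under loud assumptions: there is no learned model here, the SDF is the exact formula for a sphere, and `sphere_sdf` and `surface_cells` are hypothetical helper names. It finds the grid cells where the sign of the SDF flips, which is where a meshing algorithm such as marching cubes would place triangles.

```python
import math
from typing import Callable, List, Tuple


def sphere_sdf(x: float, y: float, z: float, radius: float = 1.0) -> float:
    """Signed distance to a sphere at the origin: negative inside, positive outside."""
    return math.sqrt(x * x + y * y + z * z) - radius


def surface_cells(sdf: Callable[[float, float, float], float],
                  resolution: int = 8,
                  extent: float = 1.5) -> List[Tuple[int, int, int]]:
    """Return the grid cells whose corners straddle the surface (SDF changes sign)."""
    step = 2 * extent / resolution
    cells = []
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                # Evaluate the SDF at the 8 corners of this cubic cell.
                corners = [
                    sdf(-extent + (i + di) * step,
                        -extent + (j + dj) * step,
                        -extent + (k + dk) * step)
                    for di in (0, 1) for dj in (0, 1) for dk in (0, 1)
                ]
                # Some corners inside and some outside: the surface passes through.
                if min(corners) < 0.0 < max(corners):
                    cells.append((i, j, k))
    return cells


cells = surface_cells(sphere_sdf)
print(len(cells), "of", 8 ** 3, "cells contain part of the surface")
```

Only a thin shell of cells contains the surface, so a mesh extractor can ignore the rest of the grid.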

Point-E represents OpenAI’s take on diffusion-based models, which were first proposed by Sohl-Dickstein et al. in 2015.
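
If you are curious what "diffusion" means in practice, the core trick is to add noise to data step by step and train a model to undo it. Here is a minimal sketch of the noising half only, applied to a single 3D point. The linear noise schedule and its values (1,000 steps, from 1e-4 to 0.02) are an assumption borrowed from common diffusion setups, not Point-E's actual configuration, and the function names are illustrative.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Noise variance added at each step, rising linearly (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
            for t in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar[t] = product of (1 - beta[s]) for all s <= t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(point: Tuple[float, ...], alpha_bar: float,
              rng: random.Random) -> Tuple[float, ...]:
    """Forward process: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return tuple(signal * c + noise * rng.gauss(0.0, 1.0) for c in point)


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
clean_point = (0.5, -0.25, 1.0)
for t in (0, 499, 999):
    # Early steps keep most of the signal; by the last step it is almost pure noise.
    print(t, round(alpha_bars[t], 4), add_noise(clean_point, alpha_bars[t], rng))
```

Generation runs this in reverse: a trained model starts from pure noise and removes a little of it at each step until a clean sample remains.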

What sets Point-E apart from other models?

This might sound similar to Google’s DreamFusion or Nvidia’s Magic3D, AI-based 3D generators that also convert text prompts into 3D representations, but there are quite a few differences between them.

DreamFusion creates high-definition NeRFs (neural radiance fields), whereas Point-E creates point clouds that can be processed further to meet specific requirements. NeRFs capture finer detail, but they take longer to produce and require significantly more hardware resources.

Point-E, on the other hand, is said to be up to 600 times faster and can make 3D models in minutes using a single Nvidia V100 GPU. This makes it a much more efficient solution.

How can I use Point-E?

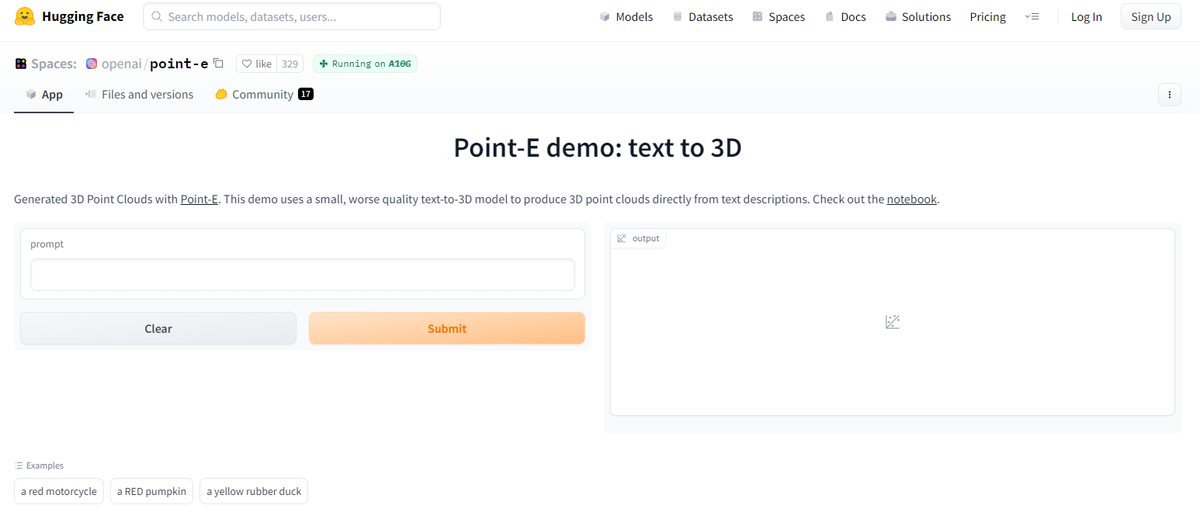

While there isn’t an official hosted platform for taking Point-E out for a spin, like ChatGPT’s website, Hugging Face hosts a user-friendly demo that generates 3D objects from text prompts.

With a bit of programming knowledge, you can also adapt the tool to your own needs using the open-source code available on OpenAI’s GitHub.

What can Point-E be used for?

Moving on from how it works, let’s dive into how it is used. For starters, it will be a huge help to those in the 3D art industry.

Professionals in the 3D animation and game development industries stand to benefit greatly from this tool. Characters, environments, and other game assets are often modeled in 3D, which takes a lot of time and money. With a text-to-3D generation tool, developers could simply write a description of the object they want, and the tool would generate a 3D model automatically.

This can greatly reduce the time and effort they have to spend on their work. It can also lead to better results, because they will have more time for refinement and more freedom to be creative.

This could pave the way for higher-quality work and greater flexibility for 3D artists. The technology could also be used in product design, allowing for faster prototyping and product visualization, and in animation, to quickly make 3D models for movies and other animated content.

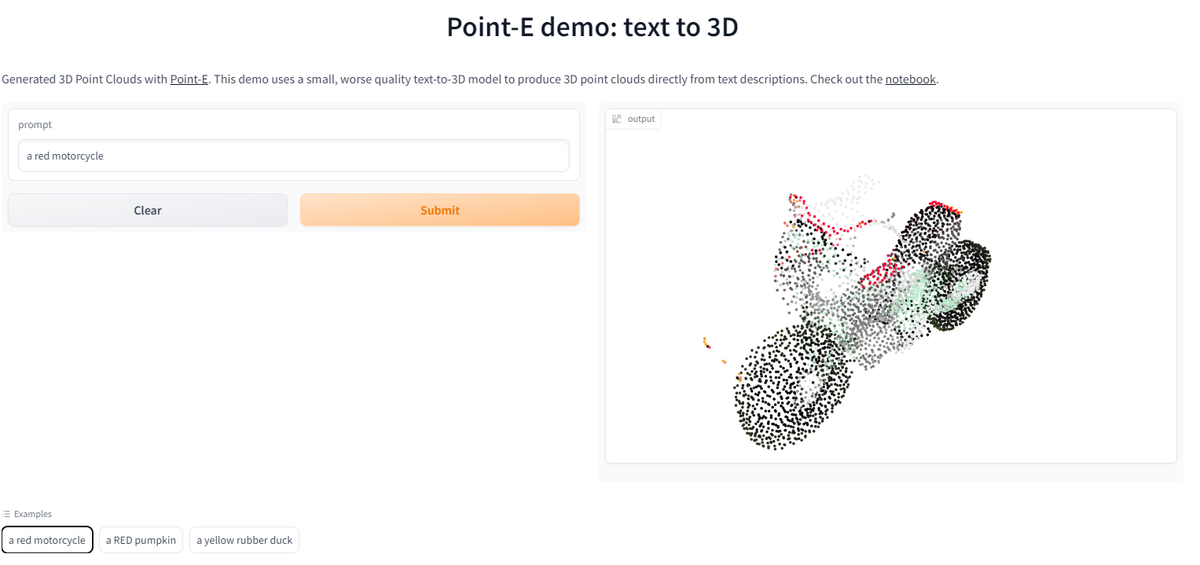

As you can see, this 3D output is not as sophisticated as you might expect. The model takes a lot of resources, and the free demos are limited in their output. This will likely improve over time.

Will Point-E pose a threat to artists?

The obvious question, then, is whether AI-based tools like this pose a threat to professionals. Point-E’s ability to quickly and easily make 3D models from text descriptions could save time and resources and give people new ways to improve their fields, but that same ability is what worries the people who do this work for a living.

For example, there was a recent backlash against AI art when an image generated using Midjourney was submitted to an art competition and won. Several artists reacted to this and started a trend with the hashtag #notoaiart.

Similarly, the use of ChatGPT to debug and even write code from scratch came as a big blow to newbie programmers. When spammy answers written with ChatGPT started showing up on Stack Overflow, things got worse. As a result, Stack Overflow banned answers generated by ChatGPT.

While the threat is real, it is worth noting that tools like DALL-E and ChatGPT, if used correctly, can serve as great assets for artists and programmers, be it to improve their work or fix mistakes.

It all depends on whether users choose to use these tools maliciously or with the right mindset. Overall, text-to-3D generation has a lot of potential to make many industries more efficient and creative.